Driving human emotions

February 17, 2014

Driving can be a very stressful activity, especially in Chicago. But what if cars could detect a driver’s emotional state and possibly prevent an accident?

This is the goal of a team of researchers from the Affective Computing Research Group at the Massachusetts Institute of Technology’s Media Lab.

Still in development, the Media Lab’s AutoEmotive project aims to create an empathetic vehicle that can detect a driver’s mood from data collected by sensors.

The car would carry sensors in places such as the steering wheel and door handle to detect the driver’s emotional state through electrodermal response measurements, which track changes in the electrical conductance of the skin. A camera mounted on the dashboard would also analyze the driver’s facial expressions.
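Electrodermal data is often summarized as skin conductance responses (SCRs): brief rises in conductance above a slowly changing baseline. The article does not say how AutoEmotive processes this signal, so the following is only a minimal Python sketch, assuming conductance samples in microsiemens taken at a fixed rate. The function names and the 0.05 µS rise threshold are hypothetical choices for illustration, not values from the MIT team.

```python
from typing import Sequence


def count_scrs(conductance_us: Sequence[float], min_rise_us: float = 0.05) -> int:
    """Count skin conductance responses in a trace sampled in microsiemens.

    A response is counted when the signal climbs at least min_rise_us above the
    lowest point seen since the previous response. The threshold is an assumed value.
    """
    if not conductance_us:
        return 0
    count = 0
    trough = conductance_us[0]
    armed = True  # True while searching for the next rise
    for prev, cur in zip(conductance_us, conductance_us[1:]):
        if armed:
            trough = min(trough, cur)
            if cur - trough >= min_rise_us:
                count += 1
                armed = False  # wait for the signal to turn down before counting again
        elif cur < prev:
            # The counted response has peaked; start tracking a new trough.
            trough = cur
            armed = True
    return count


def scr_rate_per_minute(conductance_us: Sequence[float], sample_hz: float) -> float:
    """Responses per minute, a rate often used as an index of arousal."""
    minutes = len(conductance_us) / sample_hz / 60
    return count_scrs(conductance_us) / minutes if minutes > 0 else 0.0
```

Turning that rate into a "stressed" flag would need a threshold calibrated for each driver, which this sketch leaves out.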

“Basically this will change the behavior of the car to create a more empathetic experience,” said Javier Hernandez, project leader and MIT researcher. “If you have all of these measurements of stress, then you can start aggregating all of this information from different drivers and hopefully use this information in positive ways.”

The sensor data would let the vehicle respond to different emotions through specific applications, such as softening the headlights to counter tunnel vision or turning on music to keep a drowsy driver from falling asleep at the wheel. The car could also change its color to alert other motorists when the driver is stressed, which may help ease road rage or prevent accidents.
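These responses amount to a mapping from a detected driver state to vehicle actions. Below is a minimal Python sketch, assuming the system already produces stress and drowsiness scores between 0 and 1. The state names, action names, the 0.7 threshold, and the pairing of headlight softening with stress are all hypothetical; the article does not describe how AutoEmotive chooses a response.

```python
from enum import Enum
from typing import Dict, List


class DriverState(Enum):
    CALM = "calm"
    STRESSED = "stressed"
    DROWSY = "drowsy"


# Hypothetical mapping from detected state to the kinds of responses the article lists.
RESPONSES: Dict[DriverState, List[str]] = {
    DriverState.CALM: [],
    DriverState.STRESSED: ["soften_headlights", "change_exterior_color"],
    DriverState.DROWSY: ["play_alerting_music"],
}


def classify_state(stress: float, drowsiness: float, threshold: float = 0.7) -> DriverState:
    """Pick the dominant state from two scores in [0, 1]; the threshold is assumed."""
    if drowsiness >= threshold and drowsiness >= stress:
        return DriverState.DROWSY
    if stress >= threshold:
        return DriverState.STRESSED
    return DriverState.CALM


def respond(stress: float, drowsiness: float) -> List[str]:
    """Return a copy of the action list so callers cannot mutate the shared table."""
    return list(RESPONSES[classify_state(stress, drowsiness)])
```

For example, `respond(0.9, 0.2)` selects the stress actions, while `respond(0.1, 0.8)` selects the music response for a drowsy driver.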

While the car is not ready for mass production, Hernandez said the team is working on combining different types of sensors to detect a range of human emotions.

“Right now computers don’t really have this information,” Hernandez said. “So we believe that by adding emotion-sensing technology, we can create much more compelling artificial intelligence that can better understand our feelings and better connect with us.”

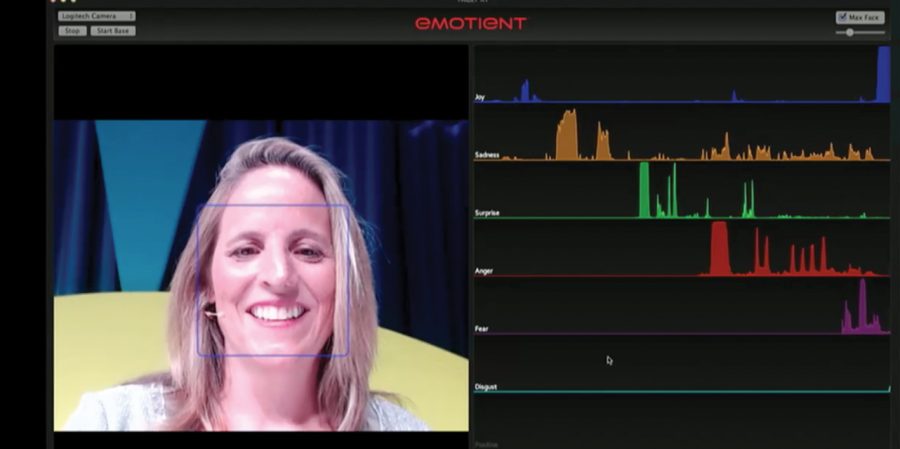

Facial expression recognition is the most advanced method in affective computing, a movement led by companies such as Emotient, which develops facial expression recognition and analysis technologies and software.

Founded in 2012 by a group from the University of California, San Diego, the company provides automatic facial expression recognition technology that uses camera-enabled devices to detect facial muscle movements and interpret them as emotions. Its software can classify emotions such as joy and anger.
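The article does not describe Emotient’s classifiers. One common way to link facial muscle movements to emotions is the Facial Action Coding System (FACS), which names individual muscle movements as numbered action units (AUs). The Python sketch below is a simple rule-based stand-in, not Emotient’s software: it assumes an upstream detector has already reported which AUs are active and compares them with commonly cited prototype combinations for joy and anger. The function name and the 0.75 match threshold are hypothetical.

```python
from typing import Dict, FrozenSet, Set

# Commonly cited FACS prototype combinations; other variants exist.
PROTOTYPES: Dict[str, FrozenSet[int]] = {
    "joy": frozenset({6, 12}),          # cheek raiser + lip corner puller
    "anger": frozenset({4, 5, 7, 23}),  # brow lowerer, upper lid raiser, lid tightener, lip tightener
}


def classify_expression(active_aus: Set[int], min_match: float = 0.75) -> str:
    """Return the emotion whose prototype best overlaps the active AUs, else 'neutral'."""
    best_label, best_score = "neutral", 0.0
    for label, prototype in PROTOTYPES.items():
        # Fraction of the prototype's action units that are currently active.
        score = len(active_aus & prototype) / len(prototype)
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= min_match else "neutral"
```

A hand-written rule table like this is only illustrative; it ignores the intensity and timing of each muscle movement.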

The retail and market research industries are only two of many areas in which the technology can be used, according to Vikki Herrera, vice president of corporate marketing at Emotient.

Herrera said emotion-detecting technology lends itself to market research because it allows companies to determine how customers truly feel about a product.

“When somebody fills out a survey at the end of experiencing a product, sometimes they only tell you what you want to hear,” Herrera said. “By monitoring and processing their emotional reaction as they’re experiencing a new product, we are able to detect their true reaction.”

The technology can also be used in the health care industry.

According to Herrera, the company has done extensive research on using facial expression analysis to help treat autism. She said games have been developed in which children with autism mimic facial expressions, helping them express themselves, recognize and understand expressions in others, and communicate. Research has also explored using the technology to monitor certain mental illnesses, such as depression, as therapy sessions increasingly move to video.

“It could be used as an output that would give interesting information that might say if somebody was feeling sad or depressed or exhibiting signs of these emotions that might cause for further diagnosis,” Herrera said.

Much of the new technology relies on non-contact sensors, which spare users from wearing devices and create a more natural environment.

But such emotion-sensing technology may not be easy to develop. Combining various types of sensors, however, could spur advances in affective computing, according to Philip Troyk, professor in the Department of Biomedical Engineering at the Illinois Institute of Technology.

“The problem is we would like to have things that are in Star Trek, but we don’t,” Troyk said. “But when you combine different sensors, then you can get a more positive indication of a person’s emotions.”
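Troyk’s point about combining sensors is an instance of sensor fusion. One textbook way to fuse independent readings is to add their log-odds, as in a naive Bayes model. The Python sketch below assumes each sensor (for example, an electrodermal sensor and a camera) reports its own probability that the driver feels a given emotion, computed against the same prior, and that the sensors are conditionally independent. The article does not say which fusion method, if any, the researchers use, and the function names are hypothetical.

```python
import math
from typing import Sequence


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1 - p))


def fuse_probabilities(probs: Sequence[float], prior: float = 0.5) -> float:
    """Combine per-sensor probabilities of one emotion by summing log-odds evidence.

    Assumes the sensors are conditionally independent given the emotion and that
    each probability was computed using the same prior.
    """
    for p in list(probs) + [prior]:
        if not 0.0 < p < 1.0:
            raise ValueError("probabilities must lie strictly between 0 and 1")
    prior_log_odds = logit(prior)
    # Each sensor contributes the evidence it adds on top of the shared prior.
    total = prior_log_odds + sum(logit(p) - prior_log_odds for p in probs)
    return 1.0 / (1.0 + math.exp(-total))
```

Two sensors that each report 0.7 combine to about 0.84, more confident than either alone, while readings of 0.7 and 0.3 cancel out to 0.5.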