New reading comprehension model scans between lines

Novel brain

December 8, 2014

Reading and language comprehension are more complicated than scientists once thought.

Understanding words spoken or read on a page has historically been linked to two places in the left hemisphere of the brain: Broca’s area in the frontal lobe and Wernicke’s area in the temporal lobe.

More recently, research has suggested that the networks responsible for language processing through reading and speech vary widely among individuals. Different and sometimes unexpected parts of the brain are engaged as comprehension takes place.

A group of researchers from Carnegie Mellon University in Pittsburgh constructed the first integrated computational model of reading, identifying which parts of the brain are active when breaking down sentences, determining the meaning of a text and understanding the relationships between words. Story comprehension itself is a complex process that combines these elements to form a lucid understanding of the text, according to the study, published Nov. 26 in the journal PLOS ONE.

“Some of [the regions] were expected, some were a bit different or you wouldn’t really expect to see them while you’re reading,” said Leila Wehbe, lead author of the study and a Ph.D. student in the Machine Learning Department at Carnegie Mellon University. “The visual regions were expected and some of the language regions were expected, but … no one has reported this much right hemisphere representation of syntax or grammar.”

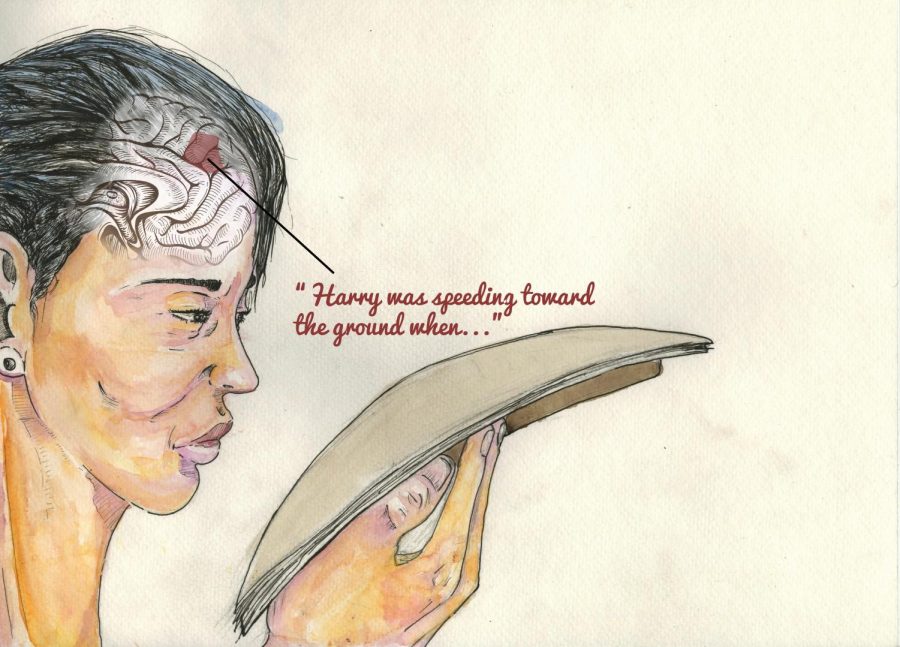

Researchers used functional MRI to document what happened in the brains of participants while they read a chapter from J.K. Rowling’s “Harry Potter and the Sorcerer’s Stone.” The scans, each representing a four-word segment of that chapter, were then analyzed to compile a model of the cognitive subprocesses that occur simultaneously during reading. The researchers established 195 identifying features for each word read.

Using this data, a machine learning algorithm was able to associate features of individual words with the regions of the brain pinpointed during the scans. The algorithm performed well enough to allow the computer to determine which of two distinct passages was being read with 74 percent accuracy based on scans alone.

“We were not sure that it was going to work,” Wehbe said. “We tried annotating the text with all these rich features and … it turned out you could [differentiate]. You could actually guess which part of the story out of the two passages was being read just from this noisy brain data.”
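The two-passage guess Wehbe describes can be illustrated with a toy sketch. This is not the study's code: it assumes a simple linear model in which each brain region's activity is a weighted sum of text features, and every name and number below (`weights`, `features_a`, `features_b`, `observed_scan`) is hypothetical and made up for illustration. The real study used 195 features per word and far more brain locations.

```python
import math

def predict_activity(features, weights):
    """Predict activity per brain region as a weighted sum of text features."""
    return [sum(f * w for f, w in zip(features, region_weights))
            for region_weights in weights]

def distance(a, b):
    """Euclidean distance between two activity patterns."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def guess_passage(observed_scan, features_a, features_b, weights):
    """Pick the passage whose predicted activity is closer to the observed scan."""
    pred_a = predict_activity(features_a, weights)
    pred_b = predict_activity(features_b, weights)
    if distance(observed_scan, pred_a) <= distance(observed_scan, pred_b):
        return "A"
    return "B"

# Hypothetical learned weights: 2 brain regions x 3 text features.
weights = [
    [1.0, 0.0, 0.5],
    [0.0, 2.0, -1.0],
]
# Hypothetical feature annotations for two passages.
features_a = [1.0, 0.0, 1.0]
features_b = [0.0, 1.0, 0.0]
# A made-up noisy scan recorded while passage A was being read.
observed_scan = [1.4, -0.8]

print(guess_passage(observed_scan, features_a, features_b, weights))
```

With only two candidates, random guessing would be right about half the time, which is the baseline against which the reported 74 percent should be read.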

Wehbe noted that regions participants use in everyday life to interpret the intent of individuals they interact with were also activated when reading about and interpreting the motivations and qualities of characters in a story. Similarly, the parts of the brain associated with processing motion were activated when participants read passages about movement.

“What we’re starting to learn is that the brain responds to fictional content in text in quite similar ways that it would respond to actual content out in the real world,” said Raymond Mar, an associate professor of psychology at York University in Ontario. “So there’s this idea of embodied cognition where even abstract concepts are represented within very simple motor perception networks in the brain.”

The representation of human psychology in fiction acts as an entry point into those worlds, which might account for why people are largely less attracted to non-fiction, Mar said. Research also seems to point toward a relationship between people’s engagement with narrative fiction and their social abilities, he added.

“It’s not surprising that it shouldn’t just be two little areas of the brain but actually much, much more,” said Ted Gibson, professor in the Department of Brain and Cognitive Science at the Massachusetts Institute of Technology. “We don’t really understand at all what exactly those [extensive] networks are doing.”

Massively overlapping networks process comprehension during both reading and listening, Gibson said. How those networks represent the sounds of words, their meaning, their order in a sentence and how they fit into a discourse is difficult to tease apart.

“Just imagine the task of understanding language,” he said. “You could be reading or listening about anything … where we have to have compositional meaning of complicated ideas is in that network.”

The same approach that created the model of reading could be used to produce specific brain maps for individuals to help determine the cause of reading or processing difficulties and identify differences between the ways people learn to read and understand stories, according to the study.

“Different people are going to have different maps,” Wehbe said. “The idea is that if we characterize what is systematically different between the brains of good readers and bad readers—whether there are regions that are more efficient or smaller or larger or are working in a different way—because of the brain scan you’d find which region is different from a healthy subject.”